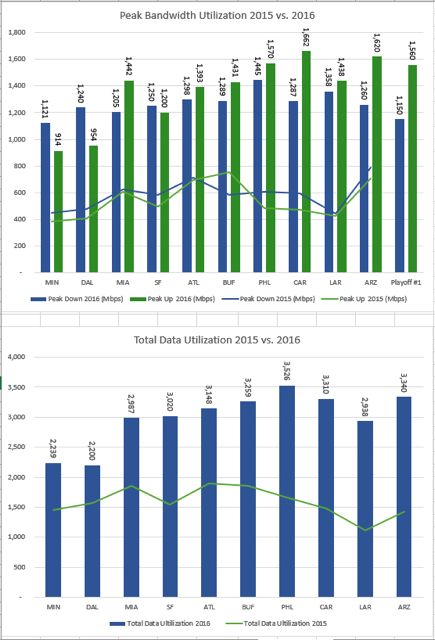

We finally have numbers for Wi-Fi usage at the most recent College Football Playoff championship game, and in somewhat of a first, the total data used during the event was much lower than at the previous year’s game. Just 2.4 terabytes of Wi-Fi data were used on Jan. 9 at Raymond James Stadium in Tampa, Fla., compared to 4.9 TB of Wi-Fi used at the championship game in 2016, held at the University of Phoenix Stadium in Glendale, Ariz.

The decline is probably not due to any sudden dropoff in user demand, since usage of in-stadium cellular or DAS networks increased from 2016 to 2017, with AT&T’s observed network usage doubling from 1.9 TB in 2016 to 3.8 TB in Tampa. More likely, the dropoff reflects the difference between the two venues’ networks: the Wi-Fi network at the University of Phoenix Stadium had been through recent upgrades to prepare for both the college championship game and Super Bowl XLIX, while the network in Raymond James Stadium hasn’t seen a significant upgrade since 2010, according to stadium officials. At last check, the Wi-Fi network at University of Phoenix Stadium had more than 750 APs installed.

Joey Jones, network engineer/information security for the Tampa Bay Buccaneers, said the Wi-Fi network currently in use at Raymond James Stadium has a total of 325 Cisco Wi-Fi APs, with 130 of those in the bowl seating areas. The design uses only overhead placements, Jones said in an email discussion, with no under-seat or handrail enclosure placements. The total number of unique Wi-Fi users for the college playoff game was 11,671, with a peak of 7,353 concurrent users, Jones said.

Still tops among college playoff championship games in Wi-Fi usage is the first one, held at AT&T Stadium in 2015, where 4.93 TB of Wi-Fi data was used. Next year’s championship game is scheduled to be held at the new Mercedes-Benz Stadium in Atlanta, where one of the latest Wi-Fi networks should be in place and operational.

The wireless networking business once known as Ruckus Wireless is finding a new home, as Arris announced today that it plans to buy Ruckus from current owner Brocade as part of an $800 million deal that also includes Brocade’s ICX switch business.
