If I had to guess, I would bet that our news that Texas A&M’s new optical-based stadium network cost “north of $20 million” to build will be one of the most talked-about things in the stadium technology world for the near future. While some may ask “who really has that kind of money to spend” on a stadium network, I think there is an equal question in the opposite direction: Can you afford not to spend that much (or at least as much as you can) to make your network as good and future-proof as it can be?

Our cover story about the new deployment at A&M’s Kyle Field in our latest STADIUM TECH REPORT (which you can download for free) may describe something of an outlier, since Texas A&M is clearly among the small subset of universities and colleges that don’t face the budgetary concerns others do. Yet it’s also instructive to look around at Texas A&M’s peers at the big-time college football level and see how few of them have even started down the road toward a top-level stadium network.

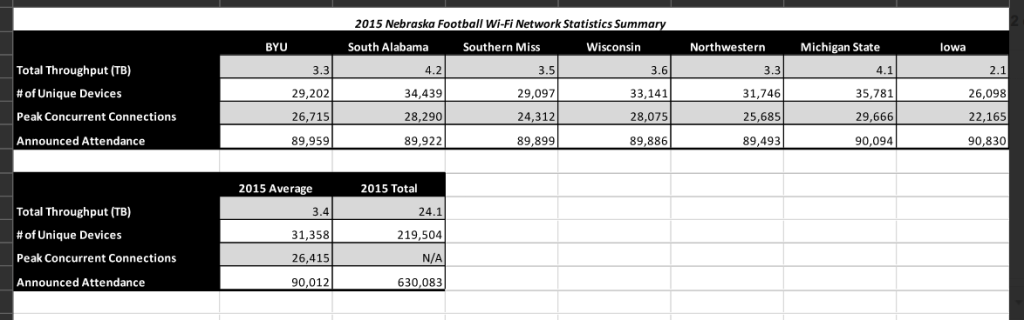

Some schools with “big” football programs (which regularly attract large, sellout crowds and have plenty of income on hand) have certainly built great networks of their own, including schools we’ve profiled, like Wisconsin, Nebraska, Baylor and more. But many more schools, even those with successful, money-making operations, still haven’t put high-speed wireless networks into their venues. The biggest question may be for them: How much longer will your fans put up with the dreaded “no signal” problem, especially as today’s kids become the ticket-buying alums you will count on in the future?

It’s not about watching the phone at the game

To be sure, we still don’t think that anyone, and we mean anyone, goes to a sporting event to stare at their phone. There is still so much to the live game-day experience, from the smells and sounds to the tribal fun, that it will always outweigh whatever entertainment or pleasure one might derive from a mobile device.

That being said, it’s also true that our society has become one that expects to connect everywhere; that’s especially so in public and social situations, where the ability to stay in touch not only facilitates face-to-face meetings (meet you there!) but also lets us stay close to others who can’t physically be with us (wish you were here!).

Time and time again, when we profile venues that have installed new wireless networks, we ask about the reasons behind the deployment, and almost always, fans complaining about not being able to connect is one of the top woes. Before the stadium refurbishment at Texas A&M, chancellor John Sharp’s office was “flooded” with emails after every home game, complaining about two things in particular: the lack of women’s restrooms, and the terrible cellular reception. They’re both plumbing problems, but some people still don’t seem to see the urgency of solving the second kind, the one that uses “pipes” to connect phones.

For the near future, it may be easy to ignore the problem and say it’s not a priority, that fans come to watch the games, not their phones. But ignoring people’s need to stay connected seems a bad way to treat paying customers, and every day your venue goes without a network is another day lost in the pursuit of a closer relationship with ticket-buyers and of the digitally supported revenue ideas that are just starting to emerge.

While we’re guessing that not every institution can support a $20 million network (even if the wireless carriers are paying half the cost), there are many other ways to skin this cat, as other profiles in our most recent STADIUM TECH REPORT issue point out. By partnering with Boingo, Kansas State was able to get both a DAS and a Wi-Fi network built; and at Ole Miss, a partnership with C Spire got a Wi-Fi network deployed at Vaught-Hemingway Stadium, albeit one where non-C Spire customers have to pay a small fee ($4.99 per game) to use it.

Maybe charging a small fee isn’t ideal, but it’s better than no network at all, especially if you want to attend a game and still remain somewhat connected to the outside world. And we haven’t even mentioned the public safety aspects of ensuring adequate cellular and/or Wi-Fi coverage in your venue, which might prove indispensable in an emergency.

And even at stadiums we’ve visited that have advanced cellular and Wi-Fi inside the venue itself, there is often poor or no connectivity outside. At Texas A&M, we heard tales of some 30,000 people who remained in the tailgating lots during the game, never wanting to come inside. While not all schools may have that kind of be-there fervor, the idea of an “event city” is taking shape at many venues, both pro and collegiate.

At the University of Phoenix Stadium in Glendale, Ariz., for example, a Crown Castle DAS brings connectivity to the extensive mall/restaurant area surrounding the football stadium and hockey arena; both the Green Bay Packers and the Chicago Cubs are planning outside-the-wall fan areas that will have Wi-Fi and DAS coverage to keep people connected on the way to or from the games. For many venues, outside is now as important as inside when it comes to wireless coverage.

So the question is, should your institution spend the necessary money to put great networks into your most public places, or is connectivity still a luxury your venue can’t afford? We’ll admit we don’t know all the answers to those twin questions, but if you have a story or opinion one way or the other we’re ready to help you tell your tale. Let’s hear from more of you, so that everyone can learn.