Big Bird greets all visitors to Mercedes-Benz Stadium. All photos: Paul Kapustka, MSR

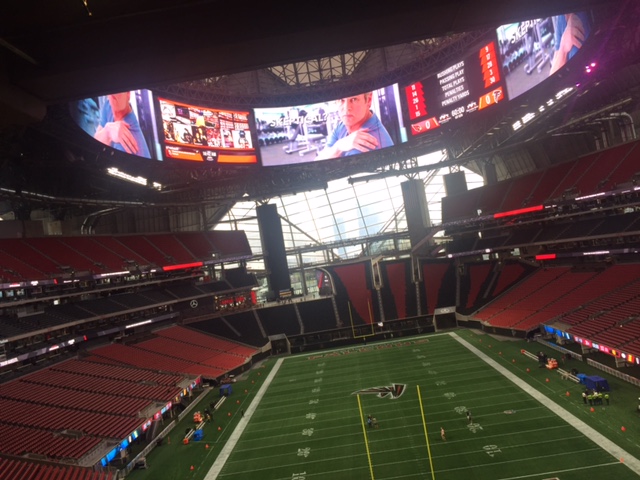

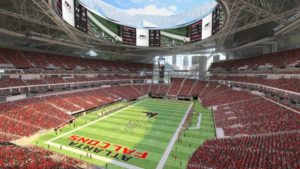

Overall first impressions, technology-wise: this is another well-thought-out venue, not just from a technology standpoint but also in its visual feel. The halo board is as impressive as advertised, though we would want to see it in action during a game (while sitting in a seat) to fully judge whether it fits in with the flow of an event. For advertisers it’s a wonder: watching all the video screens in the house switch to a synchronized ad video was a big wow factor.

Since much of the stadium interior is unfinished concrete, there wasn’t much of an effort to hide networking components. But given all the other piping and cabling, the equipment fades into the background in plain view.

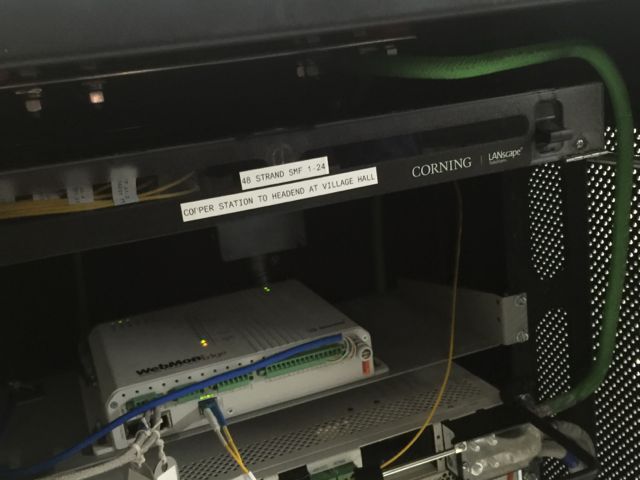

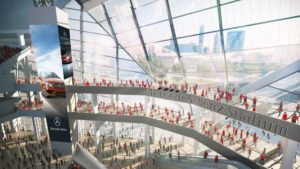

It’s our educated guess that the AT&T Porch, a wide-open gathering area in the end zone opposite the windows toward downtown, is going to be a popular hangout, since you can see the field and have multiple big-screen TV options behind you. We also liked the “technology loge suites,” smaller four-person private areas just off the main concourse with their own small TV screens and wireless device charging.

On the app side of things, it’s fair to say that features will iterate over time: neither the wayfinding nor the food-ordering options are wirelessly connected yet, but according to IBM, beacons are a possible future addition to the mix. And while Mercedes-Benz Stadium is going to all-digital ticketing, season ticket holders will most likely use RFID cards on lanyards instead of mobile phone tickets, simply because RFID is quicker. The ticket scanners are by SkiData, the fiber backbone by Corning, the Wi-Fi APs by Aruba, and the DAS by Corning and a mix of antenna providers.

Like we said, more soon! But enjoy these photos today, ahead of the first event on Aug. 26.

The view inside the main entry, with the halo board visible above

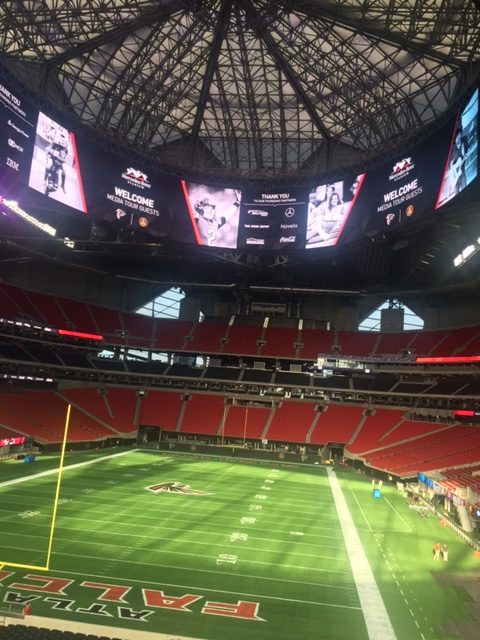

The view from the other side of the field, from the AT&T Porch

It’s hard to fit all this into one shot, but here you can see from field to roof

I spy Wi-Fi: APs point down from seat bottoms to the main entry concourse

One of the many under-seat APs

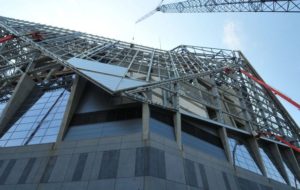

A good look at the roof: eight “petals” that all pull straight out to open, a process that by design is supposed to take 7 minutes

Good place for maximum coverage

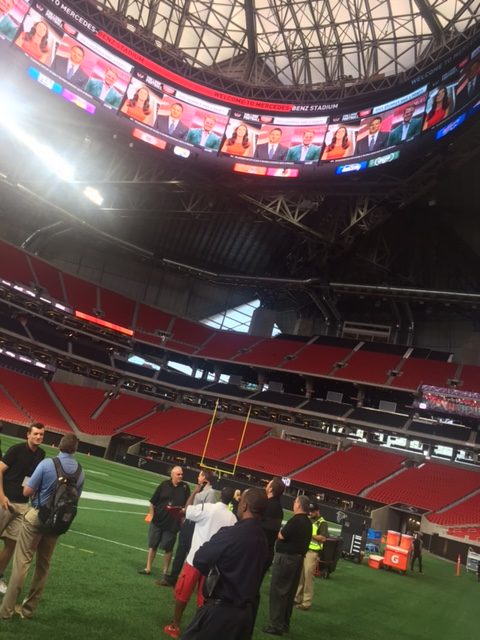

View from the field

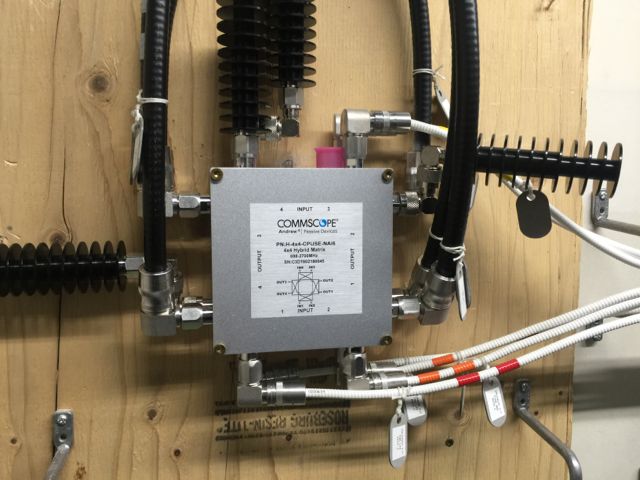

One of “hundreds” of mini-IDFs, termination points that bring fiber almost right to edge devices

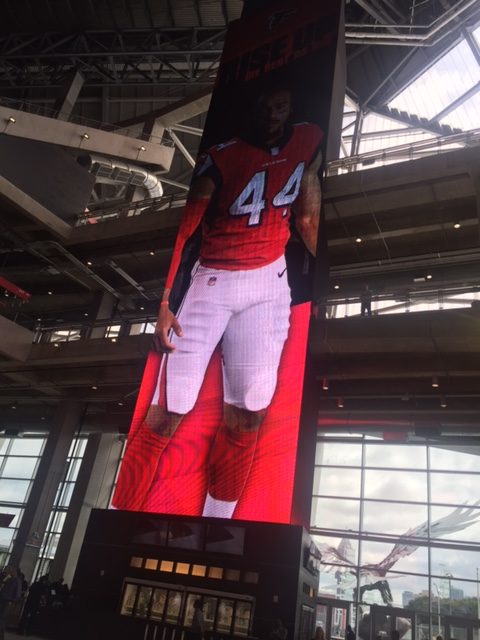

The mega-vertical TV screen, just inside the main entry. 101 feet tall!

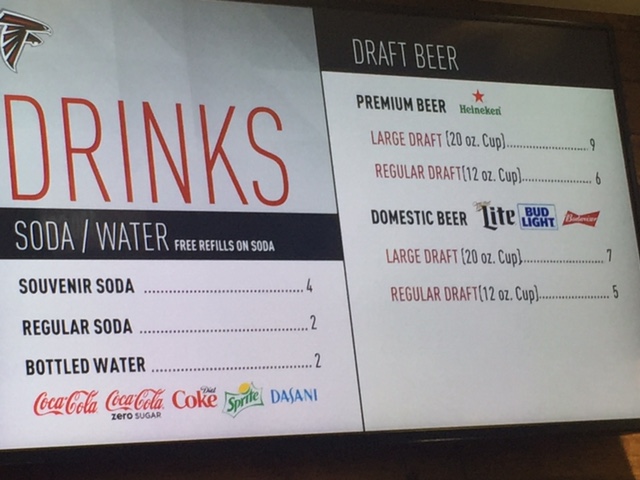

Something Falcons fans may like the most: Look at the prices!

MORE SOON!