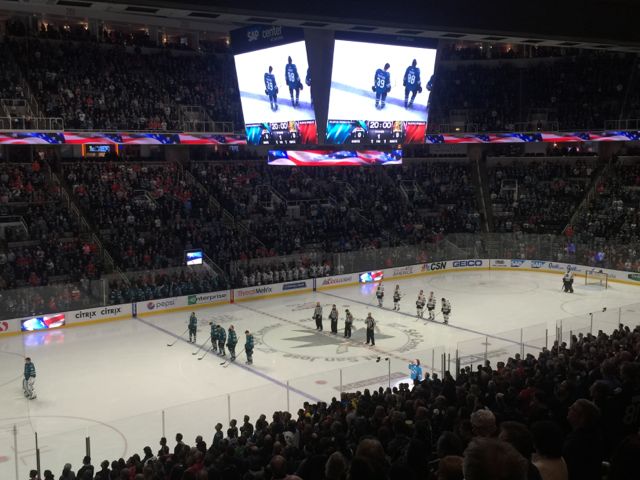

Nuggets vs. Oklahoma City Thunder at Denver’s Pepsi Center, April 9, 2017. Credit, all photos: Paul Kapustka, MSR

With speed tests showing download speeds of almost 70 Mbps in one spot on the concourse and solid high-teens numbers in upper-deck seats, the Avaya-built public Wi-Fi network kept us connected at all times. We even streamed live video of The Masters golf tournament while watching Oklahoma City beat Denver in a heartbreaking end to the Nuggets’ home season: Thunder star Russell Westbrook capped a 50-point performance with a long 3-pointer that won the game and eliminated Denver from playoff contention.

We got good speed tests last summer when we toured an empty Pepsi Center, but we had no idea how the network would perform under live, full-house conditions. The Nuggets’ home closer gave us some proof points that the Wi-Fi was working fine. One test on the concourse (in full view of some overhead APs) checked in at 69.47 Mbps for download and 60.96 Mbps for upload; another test on the upper-deck concourse came in at 37.18 Mbps / 38.30 Mbps.

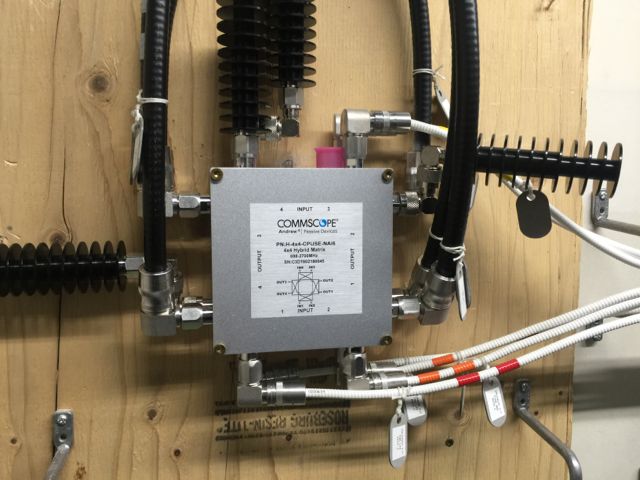

In our MSR-budget upper-deck seats (we did not request media access to the game but instead bought tickets like any other fan) we still got solid Wi-Fi numbers, with one test at 15.04 Mbps / 21.44 Mbps and another in the same spot at 17.40 Mbps / 16.27 Mbps. We didn’t see any APs under the seats; according to the Pepsi Center IT staff, some of the bowl seats are served by APs shooting up through the concrete (see picture for one possible such location). Looking up, we did see some APs hanging from the roof rafters, so perhaps it’s a bit of both.

What’s unclear going forward is who will supply the network for any upgrades, since Avaya is in the process of selling its networking business to Extreme Networks, which has its own Wi-Fi gear and a big stadium network business. For now, attendees at Nuggets, Avalanche and other Pepsi Center events seem well covered when it comes to connectivity. Better defense against Westbrook, however, will have to wait until next season.

Upper-level concourse APs at Pepsi Center; are these shooting up through the concrete?

Even at the 300 seating level, you have a good view of the court.

Taking the RTD express bus from Boulder is a convenient, if crowded, option (there was also a Rockies game that day at nearby Coors Field, making the bus trips standing room only in both directions).

Who knew Pepsi was found inside mountains? (This photo was taken last summer.)