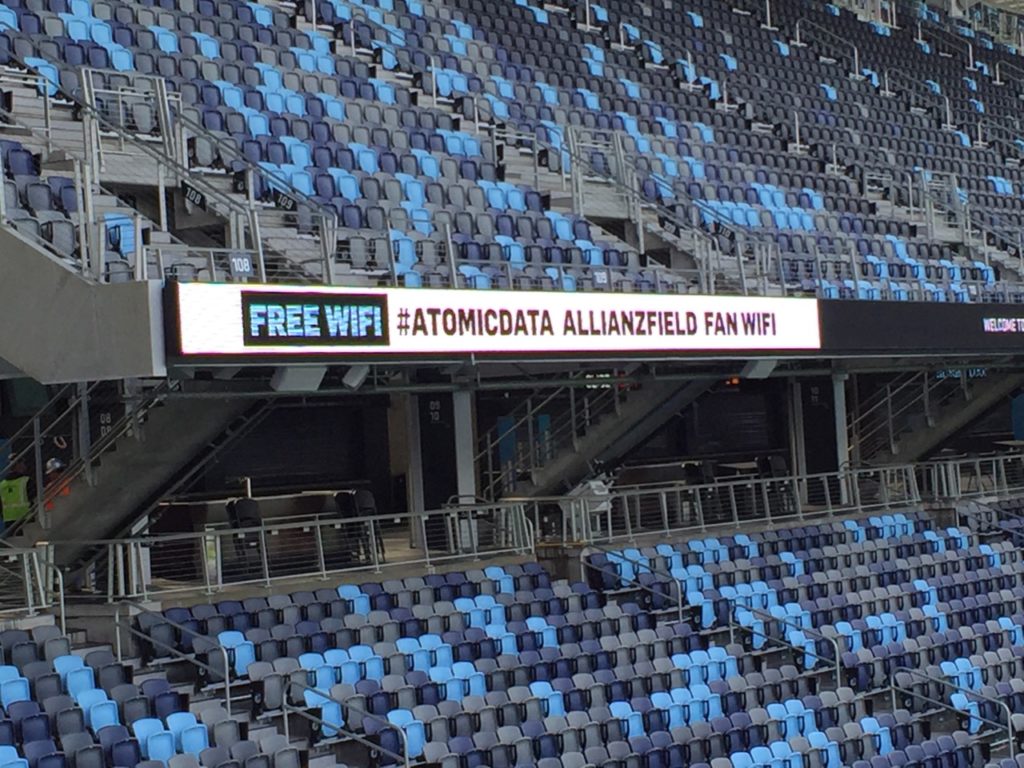

One of the Cisco Wi-Fi APs installed by Atomic Data inside the new Allianz Field in Minneapolis. Credit: Paul Kapustka, MSR

With 19,796 fans on hand on April 13 to pack the $250 million venue, Atomic Data said it saw 6,968 unique Wi-Fi device connections, a 35 percent take rate. The Allianz Field Wi-Fi network uses Cisco gear, with 480 Wi-Fi APs installed throughout the venue. Approximately 250 of those are located in the seating bowl, many of them installed under seats. The stadium also has a neutral-host DAS built by Mobilitie, though none of the wireless carriers are online yet. (Look for an in-depth profile of the Allianz Field network in our upcoming Summer STADIUM TECH REPORT issue!)

According to the Atomic Data figures, the stadium's Wi-Fi network saw peak bandwidth usage of 1.9 Gbps. Of the 85 GB of total Wi-Fi data, download traffic was 38.7 GB and upload traffic was 46.3 GB. Enjoy some photos from the opening game (courtesy of MNUFC) and a couple from our pre-opening stadium tour!
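For readers who want to check the math, here is a minimal Python sketch that recomputes the take rate, the data total and the upload share using only the figures Atomic Data reported. The variable names are our own, chosen for illustration.

```python
# Figures as reported by Atomic Data for the April 13 opener.
attendance = 19_796        # fans in the stands
unique_devices = 6_968     # unique Wi-Fi device connections
download_gb = 38.7         # download traffic, GB
upload_gb = 46.3           # upload traffic, GB

# Take rate: share of attendees whose devices connected to Wi-Fi.
take_rate = unique_devices / attendance

# Total Wi-Fi data is the sum of download and upload traffic.
total_gb = download_gb + upload_gb

# Share of the total that was upload traffic.
upload_share = upload_gb / total_gb

print(f"Take rate: {take_rate:.1%}")
print(f"Total Wi-Fi data: {total_gb:.1f} GB")
print(f"Upload share: {upload_share:.1%}")
```

The take rate works out to about 35.2 percent, which matches the reported 35 percent. The total is 85.0 GB, with upload traffic making up roughly 54 percent of it.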

The game opened with a helicopter fly-by

A look at the standing-area supporter end zone topped by the big Daktronics display

The traditional soccer scarves were handy for the 40-degree temperatures

A view toward the field through the brew house window

The main pitch gets its opening salute

Entryways were well covered with Wi-Fi to power the all-digital ticketing

The Loons have a roost!

The view as you approach the stadium while crossing I-94

One of the under-seat Wi-Fi AP installations

Message boards let fans know how to connect